A long time ago I published a post on OpenStack Networking principles, you can find it here:

Based on the feedback I got, it's too complex and hard to digest: basically, not written in a language that humans can understand. This motivated me to try and explain it in a simpler way so that anyone, even network engineers like myself, could get it, ergo the name of the post.

OpenStack is an open source platform composed of different Projects. The Networking Project is called Neutron. To fully understand how this all comes together, I will cover the following concepts:

- Linux Networking

- OVS (Open vSwitch) Networking

- Neutron

- Why OpenStack requires SDN.

Linux Networking

In virtualization, network devices such as switches and NICs are virtualized. A virtual Network Interface Card (vNIC) is the NIC equivalent for a Virtual Machine (VM). The hypervisor can create one or more vNICs for each VM. To the VM, each vNIC looks just like a physical NIC (the VM doesn't "know" that it's not a physical server).

A switch can also be virtualized, as a virtual switch (vSwitch). A virtual switch works in the same way as a physical switch: it populates the CAM table that maps ports to MAC addresses. Each vNIC is connected to a vSwitch port, and the vSwitch accesses the external physical network through the physical NIC of the physical server.
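To make the CAM table idea concrete, here is a minimal sketch of a learning switch in Python. It is a toy model, not how any real vSwitch is implemented; the class name, port names and shortened MAC addresses are all made up for illustration.

```python
class LearningSwitch:
    """Toy model of a virtual L2 switch (all names here are illustrative)."""

    def __init__(self, ports):
        self.ports = set(ports)
        self.cam = {}  # CAM table: MAC address -> port it was last seen on

    def receive(self, in_port, src_mac, dst_mac):
        """Learn the source MAC, then return the set of ports to forward on."""
        self.cam[src_mac] = in_port
        out_port = self.cam.get(dst_mac)
        if out_port is None:
            # Unknown destination: flood to every port except the ingress one.
            return self.ports - {in_port}
        if out_port == in_port:
            # Destination lives on the ingress port: nothing to forward.
            return set()
        return {out_port}


switch = LearningSwitch(ports=["vnic-a", "vnic-b", "uplink"])
first = switch.receive("vnic-a", "aa:aa", "bb:bb")  # bb:bb unknown, so flooded
reply = switch.receive("vnic-b", "bb:bb", "aa:aa")  # aa:aa already learned
print(first, reply)
```

The first frame is flooded because the destination is not in the table yet; the reply goes out of a single port because the switch learned where `aa:aa` lives from the first frame.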

Before we get into how this all comes together, we need to clarify 3 concepts:

- Linux Bridge is a virtual switch used with the KVM/QEMU hypervisor. Remember this: Bridge = L2 Switch, as simple as that.

- TAP and TUN are virtual network devices based on Linux kernel implementation. TUN works with IP packets, TAP operates with layer 2 packets like Ethernet frames.

- VETH (Virtual Ethernet pair) is created to act as virtual wiring. To connect 2 TAPs you would need a Virtual Wire, or VETH. Essentially what you are creating is a virtual equivalent of a patch cable. What goes in one end comes out the other. It can be used to connect two TAPs that belong to two VMs from different Namespaces, or to connect a Container or a VM to OVS. When VETH connects 2 TAPs, everything that goes in on one TAP goes out on another TAP.

So, why the hell are all these concepts needed: TAP, VETH, Bridge…? These are just Linux concepts that are used to construct the Virtual Switch and give connectivity between VMs, and between a VM and the outside world. Here is how it all works:

- When you create a Linux Bridge, you can assign TAPs to it. To connect the VM to this Bridge, you then need to associate the VM's vNIC with one of the TAPs.

- The vNIC is associated with the TAP programmatically, in software. When the Linux bridge sends Ethernet frames to a TAP interface, it is actually sending the bytes to a file descriptor. Emulators like QEMU read the bytes from this file descriptor and pass them on to the guest operating system inside the VM, via the virtual network port on the VM. TAP interfaces are listed in the output of the ifconfig Linux command, if you want to make sure everything is where it should be.
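As a rough illustration of how these pieces are wired together, the sketch below builds the iproute2 (`ip`) commands for a bridge, a TAP and a VETH pair. It only prints the commands and does not run them (running them needs root on a Linux host). The device names `br0`, `tap0`, `veth0` and `veth1` are hypothetical, and attaching one end of the VETH to the bridge is just one possible layout.

```python
def bridge_setup_commands(bridge, tap, veth_a, veth_b):
    """Return the iproute2 commands (as argument lists) for a small bridge setup."""
    return [
        # Create the Linux Bridge (the L2 switch).
        ["ip", "link", "add", "name", bridge, "type", "bridge"],
        # Create a TAP device; the hypervisor attaches the VM's vNIC to it.
        ["ip", "tuntap", "add", "dev", tap, "mode", "tap"],
        # Plug the TAP into the bridge.
        ["ip", "link", "set", "dev", tap, "master", bridge],
        # Create the VETH pair (the virtual patch cable).
        ["ip", "link", "add", veth_a, "type", "veth", "peer", "name", veth_b],
        # Plug one end of the cable into the bridge.
        ["ip", "link", "set", "dev", veth_a, "master", bridge],
        # Bring the bridge up.
        ["ip", "link", "set", "dev", bridge, "up"],
    ]


commands = bridge_setup_commands("br0", "tap0", "veth0", "veth1")
for argv in commands:
    print(" ".join(argv))
```

The other end of the VETH pair (`veth1` here) would then be moved into a namespace or attached to another switch, depending on what you want to connect.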

OVS

OVS is a multilayer virtual switch, designed to enable massive network automation through programmatic extension. Linux Bridge can also be used as a Virtual Switch in a Linux environment; the difference is that Open vSwitch is targeted at multi-server virtualization deployments where automation is used.

An Open vSwitch bridge is also used for L2 switching, exactly like the Linux Bridge, with one pretty important difference when it comes to automation: it can operate in two modes, Normal and Flow.

- OVS in “normal” mode acts as a normal switch, learning the source MAC addresses of the frames it receives to populate its CAM table.

- OVS in “flow” mode is why we use OVS and not the Linux Bridge. It lets you “program the flows”, using OpenFlow, OpFlex (whatever instructions come from the SDN controller), or manually (calling ovs-ofctl add-flow). Whatever flows are installed are used and no other behavior is implied. Regardless of how a flow is configured, it has a MATCH part and an ACTION part. The match part of a flow defines which fields of a frame/packet/segment must match in order to hit the flow. You can match on most fields in the layer 2 frame, layer 3 packet or layer 4 segment. So, for example, you could match on a specific destination MAC and IP address pair, or a specific destination TCP port. The action part of a flow defines what is actually done with a message that matched the flow. You can forward the message out of a specific port, drop it, change most parts of any header, build new flows on the fly (for example, to implement a form of learning), or resubmit the message to another table. Each flow is written to a specific table and is given a specific priority. Messages enter the pipeline at table 0. From there, each message is processed by table 0’s flows from highest to lowest priority. If the message does not match any of the flows in table 0, it is implicitly dropped (unless an SDN controller is defined, in which case a message is sent to the controller asking what to do with the received packet).
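The match/action/priority logic described above can be sketched in a few lines of Python. This is a simplified model of a single table, not the OVS implementation; the `Flow` class, the `lookup` function and the sample flows are assumptions made for illustration. The field names `dl_dst`, `nw_dst` and `tp_dst` are borrowed from ovs-ofctl syntax for readability only.

```python
from dataclasses import dataclass


@dataclass
class Flow:
    priority: int
    match: dict   # field name -> required value; an empty dict matches everything
    action: str   # e.g. "output:1" or "drop"


def lookup(table, packet, miss_action="drop"):
    """Return the action of the highest-priority matching flow in the table."""
    for flow in sorted(table, key=lambda f: f.priority, reverse=True):
        if all(packet.get(field) == value for field, value in flow.match.items()):
            return flow.action
    # Table miss: with no controller defined, the message is dropped.
    return miss_action


table0 = [
    Flow(100, {"dl_dst": "aa:bb:cc:00:00:01", "nw_dst": "10.0.0.5"}, "output:1"),
    Flow(50, {"tp_dst": 22}, "drop"),
    Flow(10, {"dl_dst": "aa:bb:cc:00:00:02"}, "output:2"),
]

web = {"dl_dst": "aa:bb:cc:00:00:01", "nw_dst": "10.0.0.5", "tp_dst": 80}
ssh = {"dl_dst": "aa:bb:cc:00:00:02", "nw_dst": "10.0.0.6", "tp_dst": 22}
plain = {"dl_dst": "aa:bb:cc:00:00:02", "nw_dst": "10.0.0.6", "tp_dst": 80}
unknown = {"dl_dst": "aa:bb:cc:00:00:09", "nw_dst": "10.0.0.9", "tp_dst": 443}

for packet in (web, ssh, plain, unknown):
    print(lookup(table0, packet))
```

Note how the `ssh` packet is dropped even though a lower-priority flow would have forwarded it: the priority 50 flow is evaluated first.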

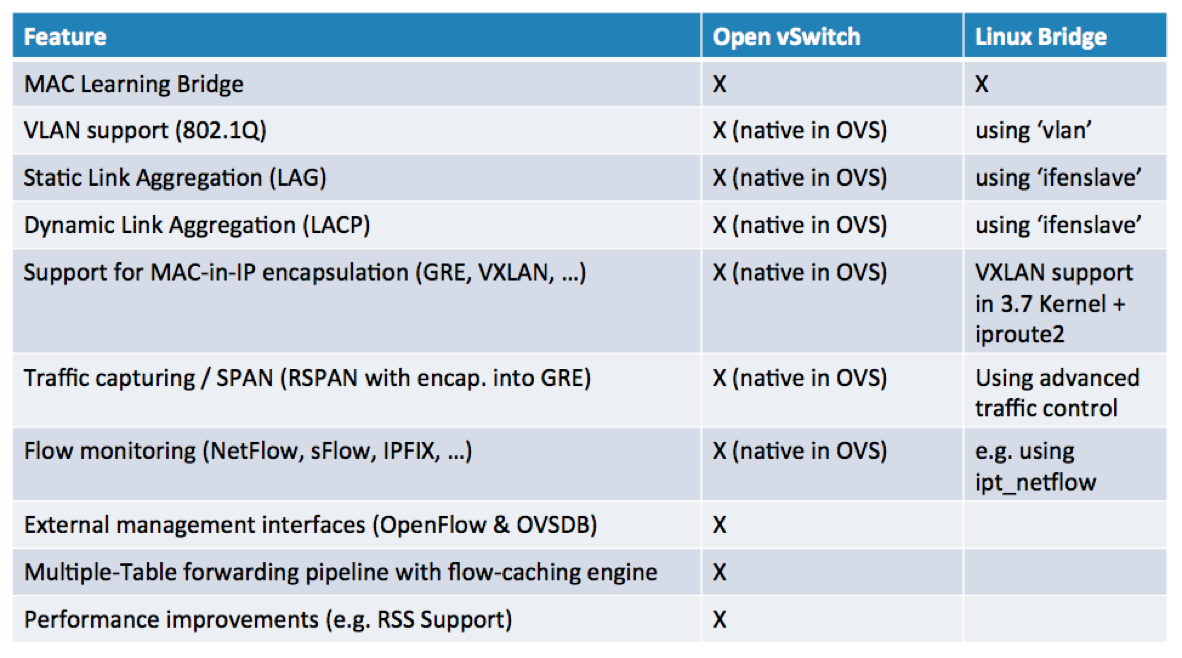

Additional differences between the Linux Bridge and OVS are represented in the Table below:

Open vSwitch can operate both as a soft switch running within the hypervisor, and as the control stack for switching silicon (a physical switch).

OpenStack Networking and Neutron

One of the main features that OpenStack brings to the table is Multi Tenancy. Therefore, the entire platform needs to support a Multi Tenant architecture, including Networking. This means that different Tenants should be allowed to use overlapping IP spaces, so the same IP address can exist in more than one Tenant. This is enabled using the following technologies:

- Network Namespaces, which are, in networking language, roughly equivalent to VRFs.

- Tenant Networks are owned and managed by the tenants. These networks are internal to the Tenant, and every Tenant is basically allowed to use any IP addressing space they want.

- Provider Networks are networks created by Administrators to map to a physical network in the data center. They are used to publish services from particular Tenants, or to allow OpenStack VMs (called Instances) to go out of the OpenStack Tenant environment.
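The reason overlapping addresses do not collide is that every lookup is scoped to a namespace, the same way a VRF scopes a routing table. The toy Python model below illustrates the idea; the `Namespace` class, tenant names and instance names are hypothetical and have nothing to do with the actual Neutron or Linux implementation.

```python
class Namespace:
    """Toy stand-in for a network namespace: its own private address table."""

    def __init__(self, name):
        self.name = name
        self.addresses = {}  # IP address -> instance name

    def assign(self, ip, instance):
        # An address only has to be unique inside its own namespace.
        if ip in self.addresses:
            raise ValueError(f"{ip} is already used in {self.name}")
        self.addresses[ip] = instance


tenant_a = Namespace("tenant-a")
tenant_b = Namespace("tenant-b")

# The same IP address in two tenants is fine: the tables are independent.
tenant_a.assign("10.0.0.5", "web-1")
tenant_b.assign("10.0.0.5", "db-1")

print(tenant_a.addresses["10.0.0.5"], tenant_b.addresses["10.0.0.5"])
```

Assigning `10.0.0.5` a second time inside `tenant-a` would raise an error, because the conflict check applies only within a single namespace.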

To understand the concept of Provider Networks, I'll explain the two ways they are used, which are the only ways for VMs to reach the outside network:

- SNAT (Source NAT) is similar to the NAT service an office uses on a firewall to go out to the Internet. All the VMs can use a single IP (or a group of IPs) that the Admin configured when deploying OpenStack, to get to the network outside of the OpenStack environment (Internet, or a LAN network).

- Floating IP is used for publishing services. You need to manually assign a Floating IP to every VM that has to be accessible from the outside.

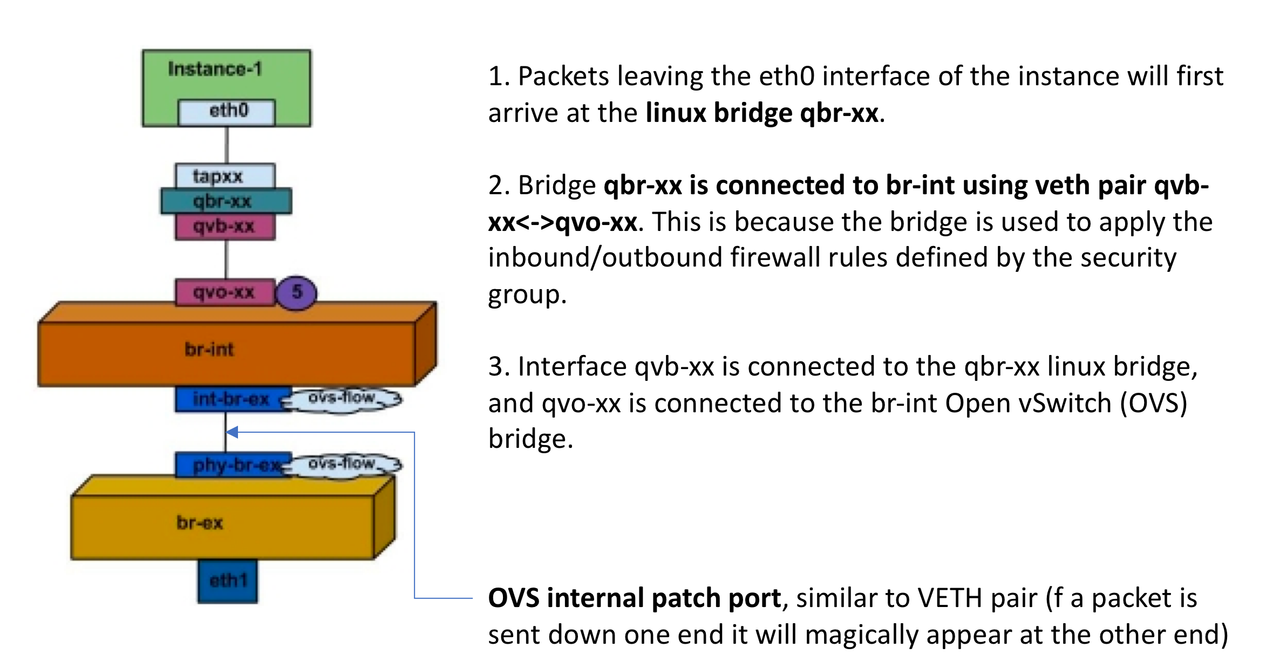

Bridges and Bridge Mappings are a crucial concept when it comes to OpenStack Networking. It’s all about how different BRIDGES and TAPs come together.

- br-int, integration network bridge, is used to connect the virtual machines to internal tenant networks.

- br-ex, external network bridge, is used for the connection to the PROVIDER networks, to enable connectivity to and from virtual instances. br-ex is mapped to a Physical Network, and this is where the Floating IP and SNAT IP addresses are applied to traffic from instances leaving OpenStack via the Provider Networks.

Let's check the Data Flow now, using the example of a single OpenStack instance (Instance-1) being assigned a Floating IP and accessing the Public Network.

As previously explained, NAT is done on br-ex, so the Floating IP is also assigned on br-ex, and from that point on the Instance accesses the Public Networks with the assigned Floating IP. If no Floating IP has been assigned, the Instance accesses the outside world using SNAT.
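The choice between Floating IP and SNAT described above boils down to a lookup with a fallback. The Python sketch below models only that decision; the function names are made up, and the addresses are illustrative (the 203.0.113.0/24 block is reserved for documentation).

```python
def translate_outbound(src_ip, floating_ips, snat_ip):
    """Return the source address a packet carries after leaving via br-ex."""
    # An instance with a Floating IP uses it; everything else shares the SNAT IP.
    return floating_ips.get(src_ip, snat_ip)


def translate_inbound(dst_ip, floating_ips):
    """Return the internal address for traffic arriving from outside, if any."""
    for internal_ip, floating_ip in floating_ips.items():
        if floating_ip == dst_ip:
            return internal_ip
    # No Floating IP mapping: the instance is not reachable from outside.
    return None


snat_ip = "203.0.113.1"                          # shared address set by the Admin
floating_ips = {"10.0.0.5": "203.0.113.10"}      # Instance-1 has a Floating IP

instance_1_out = translate_outbound("10.0.0.5", floating_ips, snat_ip)
instance_2_out = translate_outbound("10.0.0.6", floating_ips, snat_ip)
inbound_hit = translate_inbound("203.0.113.10", floating_ips)
inbound_miss = translate_inbound("203.0.113.1", floating_ips)
print(instance_1_out, instance_2_out, inbound_hit, inbound_miss)
```

This also shows why Floating IPs are the way to publish services: only they have a one-to-one mapping that works for connections initiated from the outside.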

Why OpenStack requires SDN

As explained before, Neutron is the OpenStack project with an API for defining network configuration. It offers multi-tenancy with self-service. Neutron uses plugins for L2 connectivity, IP address management, L3 routing, NAT, VPN and Firewall capabilities.

Here is why SDN is an essential requirement for any OpenStack production deployment:

- OpenStack cannot configure a physical network in accordance with its needs to interconnect VMs on different Compute nodes.

- Neutron handles basic networking correctly, but it falls short on routing, security policies, HA of the external connectivity, network performance management etc.

- OpenStack Neutron defines services for VM provisioning within an OpenStack deployment; these services include NAT, DHCP, Metadata, etc. All of these services have to be highly available and scalable to meet the environment’s demands.

- SDN reduces the load on Neutron.

- Last but not least, I've never seen a production OpenStack deployment with no SDN. Just saying...